
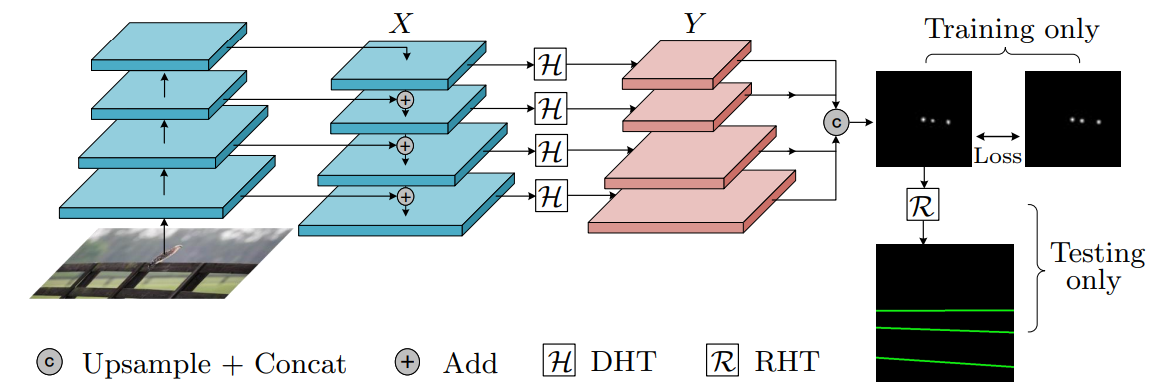

Deep Hough Transform for Semantic Line Detection

Code accompanying the paper "Deep Hough Transform for Semantic Line Detection" (ECCV 2020, PAMI 2021). arXiv:2003.04676 | Online Demo | Project page | New dataset | Line Annotator

- Training code is now publicly available.

- Jittor inference code is now publicly available.

Deep Hough Transform

Requirements

numpy

scipy

opencv-python

scikit-image

pytorch>=1.0

torchvision

tqdm

yml

POT

deep-hough

To install deep-hough, run the following commands.

cd deep-hough-transform

cd model/_cdht

python setup.py build

python setup.py install --user

Pretrained models (based on ResNet50-FPN): http://data.kaizhao.net/projects/deep-hough-transform/dht_r50_fpn_sel-c9a29d40.pth (SEL dataset) and http://data.kaizhao.net/projects/deep-hough-transform/dht_r50_nkl_d97b97138.pth (NKL dataset, used in the online demo)

Prepare training data

Download the original SEL dataset from here and extract it to the data/ directory. The directory structure should then look like this:

data

├── ICCV2017_JTLEE_gtlines_all

├── ICCV2017_JTLEE_gt_pri_lines_for_test

├── ICCV2017_JTLEE_images

├── prepare_data_JTLEE.py

├── Readme.txt

├── test_idx_1716.txt

└── train_idx_1716.txt

Then run the Python script to generate the parametric-space labels.

cd deep-hough-transform

python data/prepare_data_JTLEE.py --root './data/ICCV2017_JTLEE_images/' --label './data/ICCV2017_JTLEE_gtlines_all' --save-dir './data/training/JTLEE_resize_100_100/' --list './data/training/JTLEE.lst' --prefix 'JTLEE_resize_100_100' --fixsize 400 --numangle 100 --numrho 100

For the NKL dataset, download the dataset and put it in the data/ directory. Then run the Python script to generate the parametric-space labels.

cd deep-hough-transform

python data/prepare_data_NKL.py --root './data/NKL' --label './data/NKL' --save-dir './data/training/NKL_resize_100_100' --fixsize 400
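To make the idea of a parametric-space label more concrete, here is a minimal sketch of how a line given by two image points could be mapped to a (theta, rho) bin. It is not the repository's code: the function name `line_to_hough_bin`, the choice of the image center as origin, and the quantization steps are assumptions for illustration, and the actual scripts may differ in these details. The defaults mirror the `--fixsize 400 --numangle 100 --numrho 100` options used above.

```python
import math


def line_to_hough_bin(p1, p2, size=(400, 400), numangle=100, numrho=100):
    """Map the line through p1 and p2 (x, y in pixels) to a (theta_idx, rho_idx) bin.

    Hypothetical helper: assumes the origin is at the image center,
    theta in [0, pi) is the angle of the line's normal, and
    rho = x*cos(theta) + y*sin(theta) is the signed distance to the origin.
    """
    h, w = size
    # Shift coordinates so that the origin is the image center.
    x1, y1 = p1[0] - w / 2, p1[1] - h / 2
    x2, y2 = p2[0] - w / 2, p2[1] - h / 2

    # The normal direction is the line direction rotated by 90 degrees.
    theta = (math.atan2(y2 - y1, x2 - x1) + math.pi / 2) % math.pi
    rho = x1 * math.cos(theta) + y1 * math.sin(theta)

    # Quantization steps (assumed): pi / numangle and diagonal / (numrho - 1).
    dtheta = math.pi / numangle
    drho = math.hypot(h, w) / (numrho - 1)

    theta_idx = int(round(theta / dtheta))
    if theta_idx == numangle:
        # theta rounded up to pi: wrap to 0 and flip the sign of rho.
        theta_idx, rho = 0, -rho
    rho_idx = int(round(rho / drho)) + numrho // 2
    return theta_idx, rho_idx


if __name__ == "__main__":
    # A horizontal line through the center of a 400x400 image.
    print(line_to_hough_bin((0, 200), (400, 200)))
```

Under these assumptions, every line crossing the image corresponds to a single cell of a numangle × numrho grid, which is the kind of target a network operating in the parametric space can be trained to predict.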

Training

The default hyperparameters are defined in the config file 'config.yml'; edit it to change them as needed. Then run the following command.

python train.py

Forward

Generate visualization results and save the line coordinates to _.npy files.

CUDA_VISIBLE_DEVICES=0 python forward.py --model (your_best_model.pth) --tmp (your_result_save_dir)
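Turning a detection in the parametric space back into image coordinates amounts to a reverse mapping from a (theta, rho) bin to the two points where the line crosses the image border. The sketch below illustrates that idea only. The function name `hough_bin_to_endpoints`, the image-center origin, and the quantization steps are assumptions, so it should not be read as what forward.py actually does.

```python
import math


def hough_bin_to_endpoints(theta_idx, rho_idx, size=(400, 400),
                           numangle=100, numrho=100):
    """Return up to two (x, y) points where the line of a Hough bin meets the image border.

    Hypothetical helper: assumes origin at the image center, theta = angle of
    the line's normal, and rho = x*cos(theta) + y*sin(theta).
    """
    h, w = size
    theta = theta_idx * math.pi / numangle
    rho = (rho_idx - numrho // 2) * math.hypot(h, w) / (numrho - 1)
    c, s = math.cos(theta), math.sin(theta)
    eps = 1e-9

    candidates = []
    if abs(s) > eps:  # not vertical: intersect with the left and right borders
        for x in (0.0, float(w)):
            y = (rho - (x - w / 2) * c) / s + h / 2
            candidates.append((x, y))
    if abs(c) > eps:  # not horizontal: intersect with the top and bottom borders
        for y in (0.0, float(h)):
            x = (rho - (y - h / 2) * s) / c + w / 2
            candidates.append((x, y))

    # Keep intersections inside the image, dropping duplicates at corners.
    points = []
    for x, y in candidates:
        inside = -eps <= x <= w + eps and -eps <= y <= h + eps
        is_new = all(math.hypot(x - px, y - py) > 1e-6 for px, py in points)
        if inside and is_new:
            points.append((x, y))
    return points[:2]


if __name__ == "__main__":
    # The central bin at theta = pi/2 is a horizontal line through the image center.
    print(hough_bin_to_endpoints(50, 50))
```

Because of quantization, the recovered endpoints are only accurate to within one rho or theta step of the original line.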

Evaluate

Test the EA-score on the SEL dataset. After running the forward pass and obtaining the coordinate files, run the following command to compute the EA-score.

python test_sel.py --pred result/debug/visualize_test/(change to your own path which includes _.npy files) --gt gt_path/include_txt

For the NKL dataset, run the following command.

python test_nkl.py --pred result/debug/visualize_test/(change to your own path which includes _.npy files) --gt gt_path/include_txt

To evaluate with other metrics such as Chamfer distance (CD) and Earth Mover's Distance (EMD), change the evaluation function in metric.py.
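For readers unfamiliar with the EA-score, the following is a rough sketch of how the similarity between two lines could be computed, based on our reading of the paper's definition: an angular term S_A = 1 - Δθ/(π/2), a Euclidean term S_E = 1 - D with D the distance between the line midpoints in an image normalized to the unit square, and the final score (S_A · S_E)². The function name `ea_score` and the clamping of S_E at zero are assumptions of this sketch. metric.py remains the reference implementation.

```python
import math


def ea_score(line_a, line_b, size):
    """Similarity in [0, 1] between two lines, each given as ((x1, y1), (x2, y2)).

    Hypothetical helper; size is (height, width) of the image in pixels.
    """
    h, w = size

    def normalize(line):
        # Rescale pixel coordinates so the image becomes the unit square.
        return [(x / w, y / h) for x, y in line]

    def angle(line):
        (x1, y1), (x2, y2) = line
        # Direction angle folded into [0, pi) so that endpoint order is irrelevant.
        return math.atan2(y2 - y1, x2 - x1) % math.pi

    def midpoint(line):
        (x1, y1), (x2, y2) = line
        return (x1 + x2) / 2, (y1 + y2) / 2

    a, b = normalize(line_a), normalize(line_b)

    # Angular similarity: the angle between two lines is at most pi / 2.
    d_theta = abs(angle(a) - angle(b))
    d_theta = min(d_theta, math.pi - d_theta)
    s_a = 1 - d_theta / (math.pi / 2)

    # Euclidean similarity from the midpoint distance; clamped at 0 (an
    # assumption of this sketch) so that distant lines cannot score high
    # after squaring.
    (ax, ay), (bx, by) = midpoint(a), midpoint(b)
    s_e = max(0.0, 1 - math.hypot(ax - bx, ay - by))

    return (s_a * s_e) ** 2


if __name__ == "__main__":
    # Two parallel horizontal lines, 100 px apart in a 400x400 image.
    print(ea_score(((0, 100), (400, 100)), ((0, 200), (400, 200)), (400, 400)))
```

Two identical lines score 1, while perpendicular lines score 0 regardless of their position.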

Citation

If our method or dataset is useful to your research, please consider citing us:

@article{zhao2021deep,

author = {Kai Zhao and Qi Han and Chang-bin Zhang and Jun Xu and Ming-ming Cheng},

title = {Deep Hough Transform for Semantic Line Detection},

journal = {IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI)},

year = {2021},

doi = {10.1109/TPAMI.2021.3077129}

}

@inproceedings{eccv2020line,

title={Deep Hough Transform for Semantic Line Detection},

author={Qi Han and Kai Zhao and Jun Xu and Ming-Ming Cheng},

booktitle={ECCV},

pages={750--766},

year={2020}

}

License

This project is licensed under the Creative Commons NonCommercial (CC BY-NC 3.0) license, under which only non-commercial use is allowed. For commercial use, please contact us.